Facebook has been in the public eye in recent months over privacy issues and its mishandling of user information, a difficult stretch that has bred distrust in the work Mark Zuckerberg is doing at his social network.

Zuckerberg wants to clean up his image and Facebook's, and after the recent chaos he promised changes in the short and medium term. Today the company is publishing its first transparency report, which measures how effective its new guidelines have been at removing inappropriate and prohibited content from the community. With it, Facebook hopes to regain that trust and, along the way, show that it really is working to remove that kind of content.

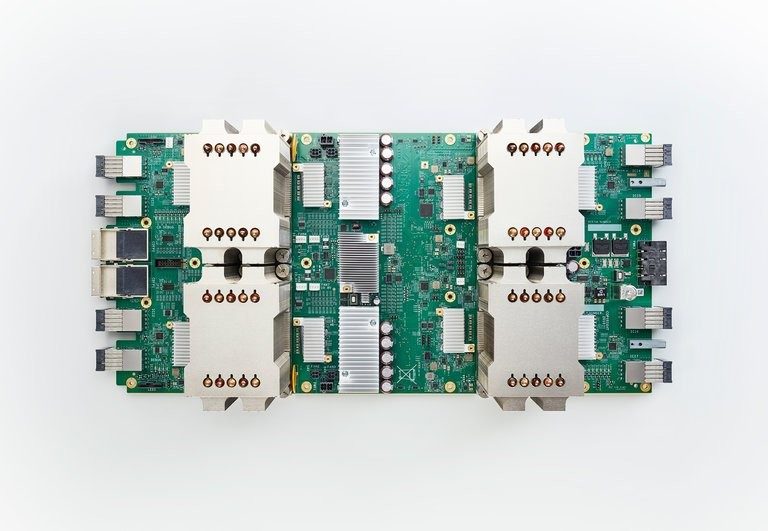

Most striking of all, the bulk of this work is carried out by automated artificial intelligence tools.

1.3 billion fake Facebook accounts removed

The report contains interesting details about the actions Facebook is taking, in which artificial intelligence plays a very important role. For example, the company claims that during the first quarter of 2018 its AI-based moderation system detected and removed most posts that included violence, pornography and terrorism.

Facebook explains that its moderation efforts focus on six main areas: graphic violence, pornography, terrorist propaganda, hate speech, spam and fake accounts. The report details how much of that content was seen by users, how much was removed, and how much was removed before any user reported it.

The figures show that during the first quarter of 2018, Facebook removed 837 million spam posts, 21 million posts with nudity or sexual activity, 3.5 million posts showing violent content, 2.5 million posts containing hate speech and 1.9 million pieces of terrorist content. In addition, the company claims to have removed 583 million fake accounts. Added to those removed during the last quarter of 2017, that comes to almost 1.3 billion fake accounts disabled.

In the case of fake accounts, Facebook says that many of them were bots whose purpose was to spread spam and carry out illicit activities. The company also says that most of these accounts “were disabled a few minutes after they were created”. However, Facebook acknowledged that not all fake accounts are removed. It estimates that between 3 and 4% of its active users are fake, but that they pose no risk because they do not engage in illicit activities.

The difficult role of artificial intelligence as a moderator of content

Facebook says that its automated, AI-based system has been decisive in this task, since it has allowed the company to act before a user files a report. For example, the company says that its system managed to detect almost 100% of spam and terrorist propaganda, almost 99% of fake accounts and close to 96% of pornography. In the case of posts with messages that incited violence, Facebook says its system detected 86%.

But this content-moderating artificial intelligence is not perfect, and it runs into its biggest problems with hate speech. During the first quarter of 2018, the system managed to detect only 38% of messages with these characteristics; the rest came from user reports.
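To put that 38% in absolute terms, here is a minimal Python sketch. It assumes the percentage applies to the 2.5 million hate speech posts the report says were removed in the quarter, and it treats both rounded figures as exact. The function name `split_by_source` is hypothetical and chosen only for illustration.

```python
def split_by_source(removed: int, proactive_percent: int) -> tuple[int, int]:
    """Split removed posts into (flagged by the system, reported by users)."""
    if not 0 <= proactive_percent <= 100:
        raise ValueError("proactive_percent must be between 0 and 100")
    # Integer arithmetic avoids floating-point rounding on large counts.
    flagged_by_system = removed * proactive_percent // 100
    reported_by_users = removed - flagged_by_system
    return flagged_by_system, reported_by_users


# Figures quoted in the article (rounded, so the results are approximate).
HATE_SPEECH_REMOVED = 2_500_000
HATE_SPEECH_PROACTIVE_PERCENT = 38

flagged, reported = split_by_source(HATE_SPEECH_REMOVED, HATE_SPEECH_PROACTIVE_PERCENT)
print(f"Flagged by the system: {flagged:,}")
print(f"Reported by users:     {reported:,}")
```

Under these assumptions, roughly 0.95 million of the removed hate speech posts were caught by the system, while about 1.55 million were removed only after users reported them.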

Facebook explains that the effectiveness of its artificial intelligence system comes from improvements in the technology and processes used to detect the content of photos, which is what has made it so efficient in cases of terrorism and pornography. But with messages, especially written ones, that show signs of hate speech, the system struggles to determine what is and what is not hate speech, because here we enter the terrain of subjectivity.

Imagine that a person expresses an opinion on a topic, and another person, or several, believe that it is hate speech. The former may consider that he is exercising his right to freedom of expression, and if his message is deleted and he receives a warning, he might think that it is censorship.

This is where Facebook would have to be clearer in its rules and define precisely what it considers “hate speech”. There are clear signs when certain topics are discussed, but cases that mix in subjective opinions and are not a direct attack fall right on that thin line. If this is complicated for humans, imagine how hard it is for artificial intelligence.

Alex Schultz, vice president of analytics at Facebook, said: “Hate speech is really difficult. There are nuances, there is context, and technology is simply not ready to really understand all this, much less across a long, long list of languages.”

For his part, Mark Zuckerberg commented: “We have much more work to do. The AI still needs to improve before we can use it to effectively eliminate problems where there are linguistic nuances, such as hate speech in different languages, but we are working on it. While we do it, we hope to share our progress.”

Facebook's transparency report will be published quarterly and will help us follow the company's actions on these complex issues. It will obviously also serve to show that Facebook is doing something about them, and so help it try to clean up its image.
